The First Helium Leak Detectors

The use of helium for leak detection can be traced back to the 1940s and the Manhattan Project. The first uranium bomb used uranium-235, which had to be separated from uranium-238. The separation was carried out in a huge “diffusion” plant that used microporous tubing as the diffusion medium, and the process had to exclude any trace of moist ambient air from the process chambers. In essence, it was imperative that all the equipment be free of leaks. Equipment of this size had never before been tested to such an extreme leak detection specification. A number of leak detection devices were tried, but none could meet the required sensitivity. Eventually, a simplified mass spectrometer based on the Nier 60° spectrometer tube was chosen for leak detection, with helium as the tracer gas. It was determined that helium flows as small as 10⁻⁶ std cm³/s could easily be detected.

Major Improvements in Helium Leak Detection

In the more than 70 years since the inception of the Manhattan Project, helium leak detectors have understandably improved drastically. A helium detector that in 1945 required a large, multi-story warehouse building can now fit on a standard laboratory benchtop, and the detection limit has improved to flow rates of 10⁻¹⁰ to 10⁻¹¹ std cm³/s. With the advent of computers, operation of a helium detector has been fully automated. As a result, helium leak detection has become common, widespread practice and is present in almost every conceivable industry, from refrigeration and semiconductors to automotive and food and drug packaging components.

Modes of Operation Using Helium Leak Detection

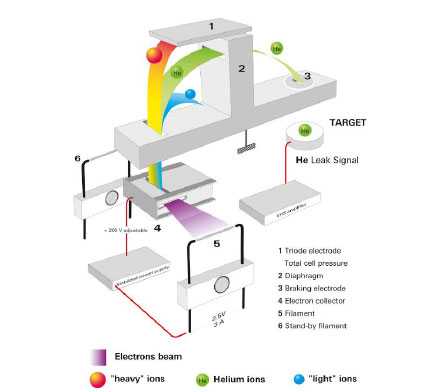

Conceptually, the principle of operation has not changed much in the past 50 years although, as noted, the size has been drastically reduced. The central piece of the helium leak detector is the cell in which the residual gas is ionised and the resulting ions are accelerated and filtered in a mass spectrometer. Most current detectors use, as in the original design, a magnetic sector to separate the helium ions from those of other gases. Permanent magnets are generally used to generate the magnetic field, so the helium peak is selected by varying the ion energy. At the highest sensitivity range, currents as low as femtoamperes have to be measured. In the most modern detectors this is achieved with an electron multiplier.

While the cell of a leak detector is not much different from the original design, the pumping system has changed considerably: the original diffusion pumps have been replaced by turbomolecular pumps or dry molecular-drag pumps. The sensitivity of the helium leak detector is given by the ratio between the helium flow through the leak and the partial pressure increase in the cell. To increase the sensitivity, the pumping speed for the tracer gas has to be reduced. This must be done without diminishing the pumping speed for the other gases (mainly water, as leak detection usually takes place in unbaked systems) in order to keep the appropriate operating pressure for the filament emitting the ionising electrons. Selective pumping is therefore needed to provide a high pumping speed for water and a low pumping speed for helium.
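The two relationships described above can be illustrated with a short Python sketch. It is only a rough model under stated assumptions: the peak selection uses the textbook magnetic-sector relation r = sqrt(2mV/q)/B for a singly charged ion, and the cell pressure uses the steady-state relation Δp = Q/S. The function names, the field strength, the sector radius, and the use of Pa·m³/s for leak rate are all hypothetical choices made for the example and do not describe any particular detector.

```python
# Illustrative sketch only: all numeric values are assumed for the example.

ELEMENTARY_CHARGE = 1.602176634e-19   # coulombs
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kilograms


def accelerating_voltage(mass_u, field_tesla, radius_m, charge_state=1):
    """Voltage that places an ion of the given mass on the sector radius.

    With a permanent magnet the field B and the radius r are fixed, so the
    ion energy is the only adjustment. Solving r = sqrt(2 m V / q) / B for V
    gives V = q * B**2 * r**2 / (2 * m).
    """
    q = charge_state * ELEMENTARY_CHARGE
    m = mass_u * ATOMIC_MASS_UNIT
    return q * field_tesla**2 * radius_m**2 / (2.0 * m)


def partial_pressure_rise(leak_rate, pumping_speed):
    """Steady-state helium partial pressure rise in the cell, dp = Q / S.

    leak_rate is in Pa*m^3/s and pumping_speed in m^3/s, so the result is
    in Pa. A lower helium pumping speed gives a larger signal per unit leak.
    """
    if pumping_speed <= 0:
        raise ValueError("pumping speed must be positive")
    return leak_rate / pumping_speed


if __name__ == "__main__":
    # Assumed geometry: 0.2 T permanent magnet, 5 cm sector radius.
    # Nominal masses: helium about 4 u, water about 18 u.
    for name, mass in (("helium", 4.0), ("water", 18.0)):
        volts = accelerating_voltage(mass, field_tesla=0.2, radius_m=0.05)
        print(f"{name}: {volts:.0f} V")

    # Same assumed leak, two helium pumping speeds (m^3/s).
    for speed in (1e-3, 5e-4):
        rise = partial_pressure_rise(1e-10, speed)
        print(f"S = {speed:g} m^3/s -> dp = {rise:.2e} Pa")
```

In this model, halving the helium pumping speed doubles the partial pressure rise for the same leak, which is why selective pumping (slow for helium, fast for water) raises sensitivity without raising the total pressure at the filament.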

We hope that you have learned something regarding the history of helium leak detectors. In future installments, we will address various test methods and case studies that will provide more specific insight into the use of helium applied to the package leak testing needs of the pharmaceutical and life sciences industries.

